
UMD Researchers to Untangle Language Problems for Tongue-Tied Stroke Survivors

UMD researchers posit that the timing of operations for language production is misaligned in individuals with agrammatic aphasia. Like the chocolates that Lucy and Ethel struggle to wrap on a speeding conveyor belt in an iconic “I Love Lucy” episode, nouns arrive at the area of the brain dedicated to articulatory planning but pass by unserviced. Photo by Album/Alamy Stock Photo

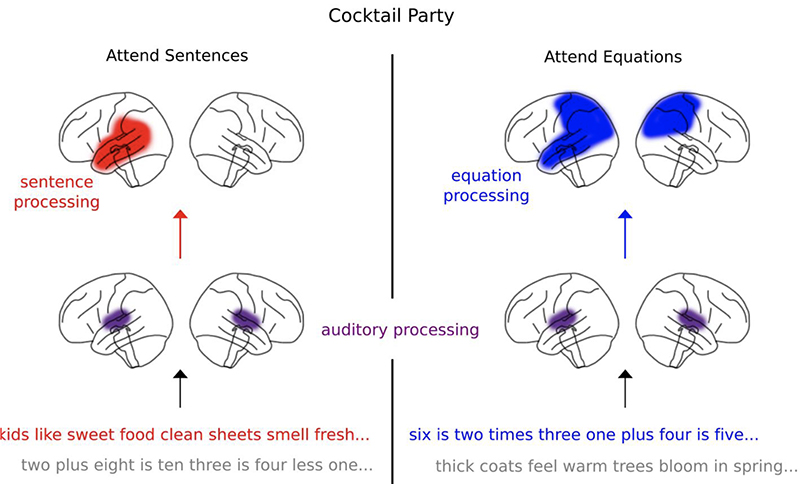

If you’ve ever started talking without knowing exactly how you’ll finish a sentence, then you’re familiar with the conveyor-belt-like process by which the human brain produces speech: your brain finds the correct words, arranges them in order, and then sends signals to the mouth and tongue to speak. As the first words of a sentence cross your lips, your brain is already finding the next words, arranging them, and so on.

The delicate coordination of these operations can occasionally go awry for anyone. For stroke survivors, however, the breakdown can be far more serious and lasting.

Funded by a new five-year, $3.1 million grant from the National Institutes of Health, two University of Maryland researchers are investigating the timing of the neural processes required for spoken language production and their disruption in agrammatic aphasia, a common post-stroke disorder.

The collaboration between Yasmeen Faroqi-Shah, a professor of hearing and speech sciences, and L. Robert Slevc, an associate professor of psychology, uses magnetoencephalography (MEG) brain imaging to record neural activity during speech and to separate out the signals associated with each of the distinct operations involved in speaking.

“Working in aphasia is often detective-work science, because language breakdowns don’t care what your model of language is,” said Slevc. “Individuals with aphasia come in with a set of symptoms, and then you try to uncover just what’s going on. In this study, we are drilling down on both the symptoms and past theoretical work to test a new model of how language breaks down. This will provide insights that will help us reconceptualize how language production works more broadly.”

Agrammatic aphasia is a particularly disruptive and socially debilitating disorder. People with the disorder appear to get stuck on individual words and pause frequently or use filler sounds like “umm” or “ahh.” The result is a halting string of nouns, produced painstakingly at a pace of about one word every two seconds.

At the same time, individuals with agrammatic aphasia have little trouble understanding language. The disorder is not an intellectual one: they know what they would like to say but simply cannot say it. (For an example of agrammatic aphasia, watch an interview with stroke survivor Mike Caputo. When asked what it feels like to have agrammatic aphasia, he replies, “Brain is good, but speech, words—yuck.”)

The symptoms of agrammatic aphasia lead to social and financial hardships, said Faroqi-Shah, who has worked with stroke survivors for nearly 25 years. People with agrammatic aphasia are acutely aware of their condition and monitor their own speech closely to avoid expressing themselves only in nouns. “But then they tend not to speak that much,” she said, “so their social life diminishes and they cannot work. Often, individuals with agrammatic aphasia end up on their couch just watching TV. We want to help them break out of that.”

The study by Faroqi-Shah and Slevc proposes a new model of agrammatic aphasia that challenges 40 years of scholarship. The disorder has long been viewed as a syntax problem: an inability to place the words of a sentence in the appropriate order. But if that were the whole story, Faroqi-Shah explained, people with agrammatic aphasia should still be able to produce words at the same rate as typical speakers. Because they cannot, she reasoned, there must also be a problem with the part of the brain that signals which movements are needed to articulate words.

“There’s a bottleneck,” she said. “Individuals with agrammatic aphasia have essentially lost their sequencer, so a sentence’s words, syntax and muscle movements for articulation do not coalesce at the right time.”

With support from a 2022 seed grant from the Brain and Behavior Institute, Faroqi-Shah and Slevc equipped the campus MEG scanner to study language production. Ordinarily, the signals generated by moving the vocal muscles would swamp the neural signals that MEG is meant to record during speech. By adding electromyography (EMG) to measure muscle activity, however, Faroqi-Shah and Slevc can identify and subtract the signal produced by the vocal muscles, separating neural signals from those generated by the physical movements of speaking.

Of the 150 MEG laboratories in the world, UMD’s is now one of only three with the capability to study language production.

###

Writer: Nathaniel Underland, underlan@umd.edu

About the Brain and Behavior Institute: The mission of the BBI is to maximize existing strengths in neuroscience research, education and training at the University of Maryland and to elevate campus neuroscience through innovative, multidisciplinary approaches that expand our research portfolio, develop novel tools and approaches and advance the translation of basic science. As a centralized community of neuroscientists, engineers, computer scientists, mathematicians, physical scientists, cognitive scientists and humanities scholars, the BBI seeks to solve some of the most pressing problems related to nervous system function and disease.

Published August 23, 2023